This tutorials is intended to demonstrate the basic components of a Hidden Markov Model and how to apply them using this toolbox.

As a reminder, this HMM toolbox is intended for applications with discrete or categorical observations.

A tutorial on hidden Markov models and selected applications in speech recognition (1989) Rabiner

Tutorial-style paper for HMMs in neuroscience: Hidden Markov models for the stimulus-response relationships of multistate neural systems (2011) Escola et al.

Efficient modifications of HMM decoding: Short-time Viterbi for online HMM decoding (2008) Bloit & Rodet

for this tutorial we will call the latent, discrete-valued state \(q_t\) and the observed discrete-valued emission signal \(s_t\). In our applications \(s_t\) is often a vector of binary spike counts.

state transition probability:

\[ \left[ q_{t+1} \right] = \left[ \alpha \right] \left[ q_t \right] \]

spike emission probability:

\[ s_{t} = Bernoulli( \eta(q_t) ) \]

(note, while a Bernoulli emission process is shown here, this toolbox works with any probability mass function)

The parameters of the spiking Hidden Markov Model (HMM) which get estimated in the training phase are the transition probabilities between states

\[p(q_{k+1} = i | q_{k} = j) = a_{ij}\]

the spiking probabilities for each of the states

\[p(s_k = 1 | q_k = i) = \eta_i\]

and the initial distribution over hidden states

\[ p(q_{0} = i) = \pi_i \]

We'll call this collection of paramters

\[ \Theta_{HMM} = \{\pi, a, \eta\} \]

fitting parameters

Estimating the parameters requires a guess for the state sequence and estimating the state sequence requires a guess for the parameters. This problem is solved by an establish alternating estimation scheme known generally as expectation-maximization, or Baum-Welch specifically for Hidden Markov Models.

Currently parameter fitting is accomplished through MATLAB:

These parameters can then be loaded into the rtxi-hmmDecoder or rtxi-hmmGenerator modules as vectors of vectors in C++

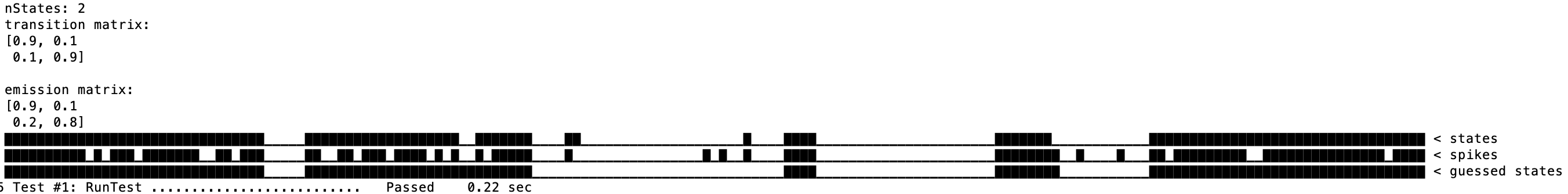

In order to simulate the Hidden Markov Model it is useful to generate a sequence of states ( \(q\)) and observations ( \(s\)) from a set of parameters ( \(\Theta_{HMM} = \{\pi, a, \eta\}\)).

(note genSeq is mostly useful in debugging, but is unlikely to be required in a final application of the decoder)

The other subtask for training the HMM is to estimate the sequence of state transitions given a guess for the parameters. \(p(q | s, \Theta_{HMM} = \{\pi, a, \eta\})\)

After the training phase, the parameters of the model are held fixed and states are estimated using the Viterbi algorithm, with a modification to discard old spiking dating which is no longer informative about the current state [REF BLOIT]

A key metric for evaluting the performance of HMM decoding is the accuracy which can be calculated simply as

\[\textrm{accuracy} = \frac{\# \textrm{correctly classified samples}}{ \textrm{total num. of samples}} \]